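From October 18 to 20, 2023, Turing Award laureates Yoshua Bengio and Andrew Yao, UC Berkeley professor Stuart Russell, and Ya-Qin Zhang, dean of Tsinghua University's Institute for AI Industry Research (AIR), jointly convened more than 20 leading AI scientists and governance experts from China, the United States, the United Kingdom, Canada, and other countries in Europe. They met at Ditchley Park in Oxfordshire, England, for the three-day inaugural International Dialogue on AI Safety. Some of the participants signed a joint statement declaring: "Coordinated global action on AI safety research and governance is critical to prevent uncontrolled frontier AI development from posing unacceptable risks to humanity."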

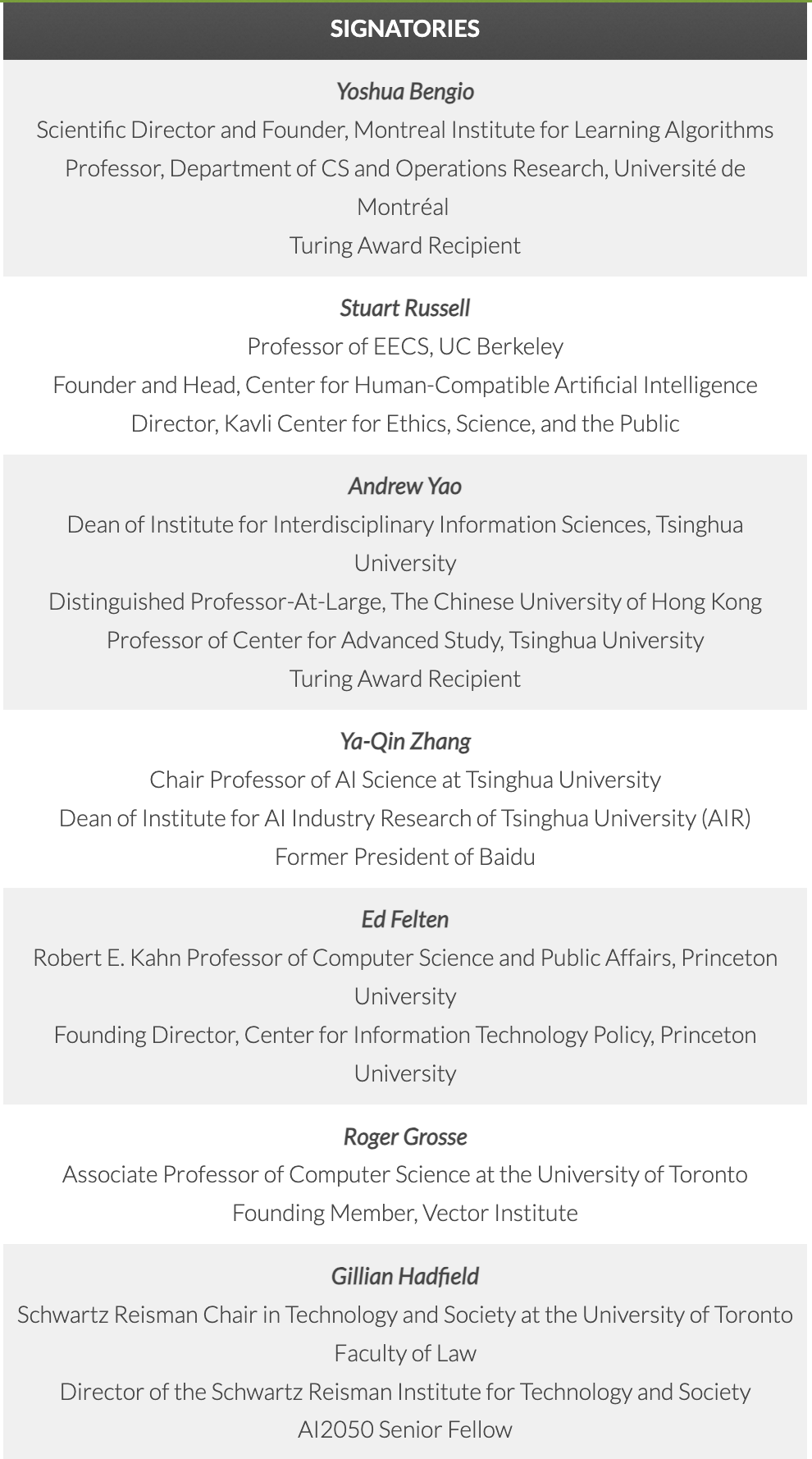

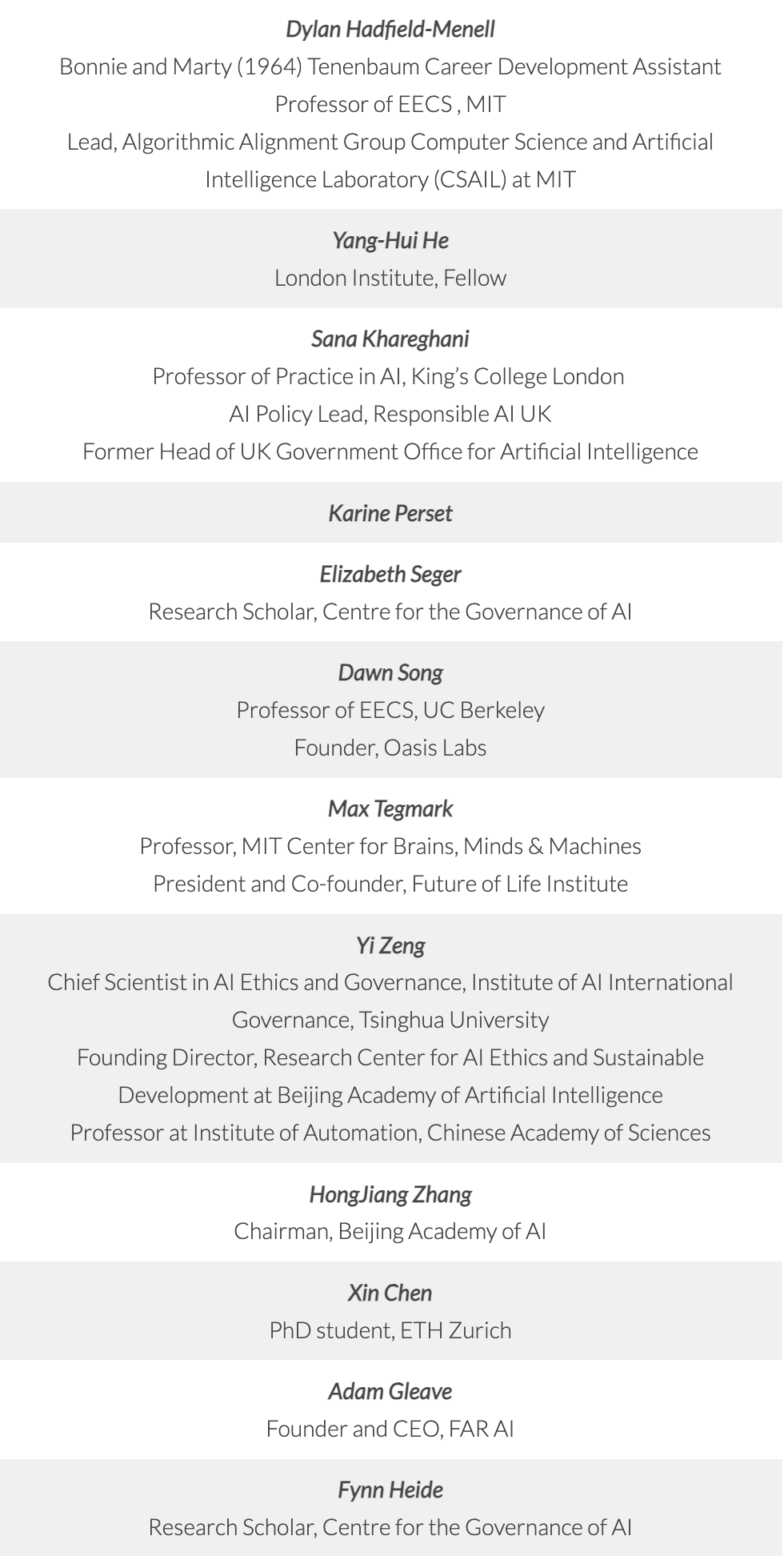

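Co-signatories of the statement include:

Yoshua Bengio, Turing Award laureate and one of the "three giants of deep learning"

Andrew Yao, Turing Award laureate, member of the Chinese Academy of Sciences, and dean of the Institute for Interdisciplinary Information Sciences at Tsinghua University

Ya-Qin Zhang, member of the Chinese Academy of Engineering and dean of the Institute for AI Industry Research (AIR) at Tsinghua University

Hongjiang Zhang, foreign member of the US National Academy of Engineering, advisor to the Beijing Academy of Artificial Intelligence, and Distinguished Visiting Professor at Tsinghua AIR

Yi Zeng, professor at the Institute of Automation, Chinese Academy of Sciences, where he heads the Brain-inspired Cognitive Intelligence Lab and directs the Center for AI Ethics and Governance

Stuart Russell, author of the standard textbook on artificial intelligence

Dawn Song, the most-cited scholar in AI security and privacy

Max Tegmark, co-founder of the Future of Life Institute, MIT professor, and author of Life 3.0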

……

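Coordinated global action on AI safety research and governance is key to preventing uncontrolled frontier AI development from posing intolerable risks to all of humanity.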

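Global action, cooperation, and capacity building are key to managing AI risk and enabling all of humanity to share in the benefits of AI development. AI safety is a global public good: it should be supported by public and private investment, and safety-related advances should be shared widely. Governments around the world, especially those of leading AI nations, have a responsibility to develop measures that prevent malicious or irresponsible actors from causing worst-case outcomes and that curb reckless competition. To this end, the international community should jointly establish an international coordination process for frontier AI.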

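The risk of malicious misuse of frontier AI systems is already close at hand: the safety measures that developers currently apply can be easily circumvented. Frontier AI systems can produce convincing misinformation and may soon be able to help terrorists develop weapons of mass destruction. Moreover, there is a serious risk that future AI systems could escape human control entirely. Even AI systems that are aligned with humans could destabilize or weaken existing social institutions. Taken together, we believe that AI may pose an existential risk to all of humanity in the coming decades.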

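In government regulation, we recommend mandatory registration of the creation, sale, and use of AI systems that exceed certain capability thresholds, including their open-source copies and derivatives, to give governments critical but currently missing visibility into emerging risks. Governments should monitor large data centers and track AI incidents, and should require developers of frontier AI models to undergo independent third-party audits that evaluate their information security and model safety. AI developers should also be required to provide the relevant authorities with comprehensive risk assessments, risk management strategies, and predictions of their systems' behavior in third-party evaluations and after deployment.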

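We also recommend defining clear red lines and establishing rapid and safe shutdown procedures. Once an AI system crosses such a red line, the system and all of its copies must be shut down immediately. Governments should cooperate to build and maintain this capability. In addition, during the training of the most advanced models and before their deployment, developers must demonstrate to regulators that their systems will not cross these red lines in order to obtain regulatory approval.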

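Making frontier AI sufficiently safe still requires major research progress. Frontier AI systems must be demonstrably aligned with their designers' intent and with social norms and values. They must also remain robust against malicious attacks and rare failure modes. We must ensure sufficient human control over these systems. Collaboration and effort by the global research community, in AI and in other disciplines, is essential: we need a global network of institutions dedicated to AI safety research and governance. We call on leading AI developers to commit at least one third of their AI R&D spending to AI safety research, and on government agencies to fund academic and non-profit AI safety and governance research in at least the same proportion.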

The original English text of the joint statement follows:

Coordinated global action on AI safety research and governance is critical to prevent uncontrolled frontier AI development from posing unacceptable risks to humanity.

Global action, cooperation, and capacity building are key to managing risk from AI and enabling humanity to share in its benefits. AI safety is a global public good that should be supported by public and private investment, with advances in safety shared widely. Governments around the world — especially of leading AI nations — have a responsibility to develop measures to prevent worst-case outcomes from malicious or careless actors and to rein in reckless competition. The international community should work to create an international coordination process for advanced AI in this vein.

We face near-term risks from malicious actors misusing frontier AI systems, with current safety filters integrated by developers easily bypassed. Frontier AI systems produce compelling misinformation and may soon be capable enough to help terrorists develop weapons of mass destruction. Moreover, there is a serious risk that future AI systems may escape human control altogether. Even aligned AI systems could destabilize or disempower existing institutions. Taken together, we believe AI may pose an existential risk to humanity in the coming decades.

In domestic regulation, we recommend mandatory registration for the creation, sale or use of models above a certain capability threshold, including open-source copies and derivatives, to enable governments to acquire critical and currently missing visibility into emerging risks. Governments should monitor large-scale data centers and track AI incidents, and should require that AI developers of frontier models be subject to independent third-party audits evaluating their information security and model safety. AI developers should also be required to share comprehensive risk assessments, policies around risk management, and predictions about their systems’ behavior in third party evaluations and post-deployment with relevant authorities.

We also recommend defining clear red lines that, if crossed, mandate immediate termination of an AI system — including all copies — through rapid and safe shut-down procedures. Governments should cooperate to instantiate and preserve this capacity. Moreover, prior to deployment as well as during training for the most advanced models, developers should demonstrate to regulators’ satisfaction that their system(s) will not cross these red lines.

Reaching adequate safety levels for advanced AI will also require immense research progress. Advanced AI systems must be demonstrably aligned with their designer’s intent, as well as appropriate norms and values. They must also be robust against both malicious actors and rare failure modes. Sufficient human control needs to be ensured for these systems. Concerted effort by the global research community in both AI and other disciplines is essential; we need a global network of dedicated AI safety research and governance institutions. We call on leading AI developers to make a minimum spending commitment of one third of their AI R&D on AI safety and for government agencies to fund academic and non-profit AI safety and governance research in at least the same proportion.

Official website of the joint statement: https://humancompatible.ai/?p=4695